Research Projects on Reinforcement Learning

- Margin Trader: A Reinforcement Learning Framework for Portfolio Management with Margin and Constraints

- Reinforcement Learning for Traffic Signal Control

- Reinforcement Learning for Safety-enhanced Traffic Signal Control

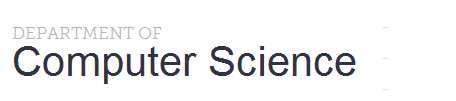

Margin Trader: A Reinforcement Learning Framework for Portfolio Management with Margin and Constraints

Margin Trader is proposed as a foundational and flexible Reinforcement Learning (RL) framework tailored for margin trading in the stock market. Our framework goes beyond the cash-only trading approach by incorporating a margin account and its constraints, allowing traders to leverage their positions in both long and short directions and providing a more realistic trading environment. Its primary objective is to strike a balance between profit maximization and risk management. To achieve this, two key modules are implemented: the Margin Adjustment Module updates buying power in a timely manner so that traders can pursue their maximum potential returns, and the Maintenance Detection Module protects the stability of the portfolio by promptly alerting traders when the margin level approaches critical points. Margin Trader supports various Deep Reinforcement Learning (DRL) algorithms and lets traders customize crucial settings of the trading environment. Traders can adapt their strategies to ever-changing market conditions by fine-tuning the equity allocated to long and short positions, manage risk tolerance by adjusting maintenance requirements, and cater to either conservative or aggressive trading styles by controlling margin ratios. Margin Trader is versatile and can be extended to other financial markets, such as futures and cryptocurrencies.
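To make the roles of the two modules concrete, the following is a minimal sketch and not the Margin Trader implementation. It assumes a simplified account model in which equity is cash plus long value minus short value, and the margin level is equity divided by gross exposure. The class name `MarginAccount`, its fields, the default ratios, and the `warning_buffer` threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class MarginAccount:
    """Hypothetical, simplified margin account used only for illustration."""
    cash: float                       # may be negative when borrowing to buy
    long_value: float = 0.0           # market value of long positions
    short_value: float = 0.0          # market value of short positions
    initial_margin: float = 0.5       # assumed initial margin ratio
    maintenance_margin: float = 0.3   # assumed maintenance requirement
    warning_buffer: float = 0.05      # assumed early-warning band

    @property
    def equity(self) -> float:
        return self.cash + self.long_value - self.short_value

    @property
    def gross_exposure(self) -> float:
        return self.long_value + self.short_value

    def buying_power(self) -> float:
        """Margin adjustment: largest additional exposure the equity supports."""
        return max(0.0, self.equity / self.initial_margin - self.gross_exposure)

    def margin_level(self) -> float:
        """Equity as a fraction of gross exposure (infinite with no positions)."""
        if self.gross_exposure == 0:
            return float("inf")
        return self.equity / self.gross_exposure

    def maintenance_status(self) -> str:
        """Maintenance detection: flag levels near or below the requirement."""
        level = self.margin_level()
        if level < self.maintenance_margin:
            return "margin_call"
        if level < self.maintenance_margin + self.warning_buffer:
            return "warning"
        return "ok"


# Start with 10,000 in cash, then buy 20,000 of stock using 10,000 of margin.
account = MarginAccount(cash=10_000.0)
power_before = account.buying_power()
account.cash -= 20_000.0
account.long_value += 20_000.0
status_after_buy = account.maintenance_status()

# A 30% price drop pushes the margin level below the assumed 30% requirement.
account.long_value *= 0.7
status_after_drop = account.maintenance_status()
```

In an RL environment, a check like `maintenance_status()` would typically run after every price update, and `buying_power()` would bound the size of the agent's next trade.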

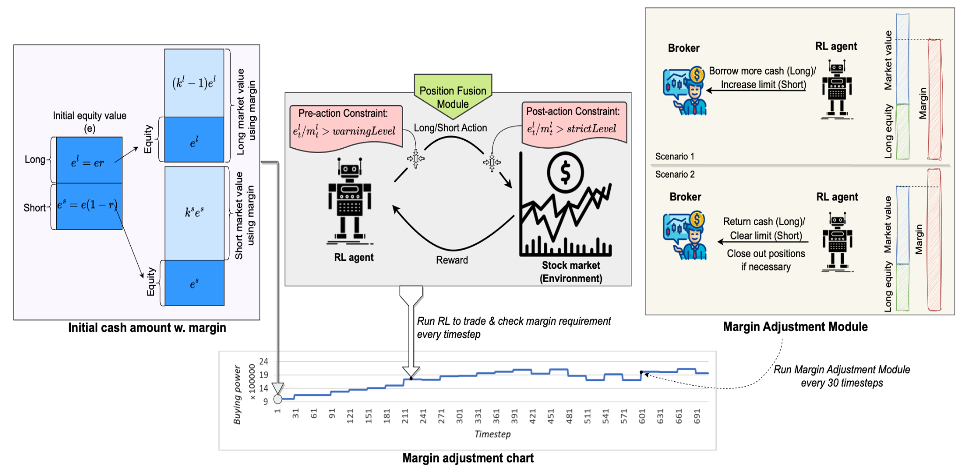

Reinforcement Learning for Traffic Signal Control

Inefficient traffic light cycle control causes problems such as long delays and wasted energy. The proposed deep reinforcement learning model uses real-time traffic information to dynamically adjust traffic light durations. The model quantifies the traffic scenario as states by collecting traffic data and dividing the intersection into small grids. The actions are changes to a traffic light's duration, and the control problem is modeled as a high-dimensional Markov decision process. The proposed model incorporates multiple optimization elements to improve performance: a dueling network, a target network, double Q-learning, and prioritized experience replay.
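The grid-based state and the double Q-learning target can be illustrated with a small sketch. It is not the project's code: the function names, the choice of an occupancy matrix plus a speed matrix, the 20 m area, and the 5 m cell size are assumptions made for illustration. The target follows the standard double Q-learning rule, in which the online network selects the next action and the target network evaluates it.

```python
def encode_state(vehicles, area_size=20.0, cell_size=5.0):
    """Map vehicles onto a square grid covering the intersection.

    vehicles: iterable of (x, y, speed), with x and y in meters measured
    from one corner of the observed area (an assumed convention).
    Returns (occupancy, speed) matrices; vehicles outside the area are ignored.
    """
    n = int(area_size // cell_size)
    occupancy = [[0] * n for _ in range(n)]
    speed = [[0.0] * n for _ in range(n)]
    for x, y, v in vehicles:
        row, col = int(y // cell_size), int(x // cell_size)
        if 0 <= row < n and 0 <= col < n:
            occupancy[row][col] = 1
            speed[row][col] = v   # assumes at most one vehicle per small cell
    return occupancy, speed


def double_q_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double Q-learning target for one transition.

    next_q_online / next_q_target: Q-values of the next state for every
    action, from the online and target networks respectively.
    """
    if done:
        return reward
    # Select the action with the online network ...
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    # ... but evaluate it with the target network.
    return reward + gamma * next_q_target[best_action]


occupancy, speed = encode_state(
    [(2.0, 3.0, 4.5), (12.0, 18.0, 0.0), (25.0, 1.0, 3.0)]
)
target = double_q_target(1.0, [0.2, 0.9, 0.5], [1.0, 2.0, 3.0], gamma=0.5)
```

Decoupling action selection from action evaluation in this way is what reduces the overestimation bias of plain Q-learning; the third vehicle in the example lies outside the observed area and is dropped.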

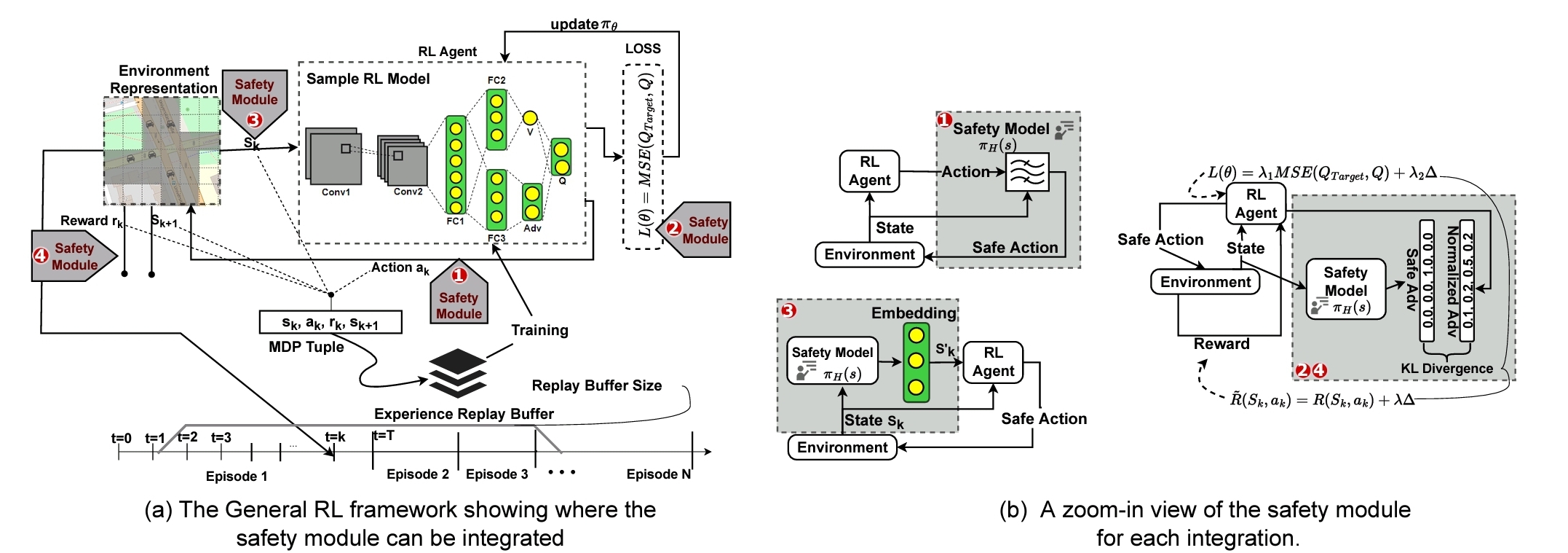

Reinforcement Learning for Safety-enhanced Traffic Signal Control

Traffic signal control is safety-critical, as a significant number of road accidents occur at intersections due to problematic signal timing. Existing studies on adaptive traffic signal control using reinforcement learning (RL) have focused on minimizing traffic delay but have neglected the potential exposure to unsafe conditions. The proposed SafeLight method enforces road safety standards to ensure the safety of RL-based traffic signal control, with the aim of operating intersections with zero collisions. SafeLight employs multiple optimization techniques, such as a multi-objective loss function and reward shaping, to integrate safety into RL models and improve both safety and mobility. Extensive experiments using synthetic and real-world benchmark datasets show that SafeLight significantly reduces collisions while increasing traffic mobility, achieving over 99% reduction in collisions compared to the backbone RL model and about 30% lower average waiting time than fixed-time control.
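As a rough illustration of how reward shaping and a multi-objective loss can fold safety into an RL objective, consider the sketch below. It is not SafeLight's actual formulation: the function names, the linear penalty on unsafe events, the convex combination of loss terms, and the weight values are all assumptions chosen for illustration.

```python
def shaped_reward(avg_wait_s, unsafe_events, safety_weight=10.0):
    """Mobility reward (negative waiting time) minus a safety penalty.

    unsafe_events: count of safety-standard violations observed in the
    step (a hypothetical signal, for example conflicting movements
    released at the same time).
    """
    return -avg_wait_s - safety_weight * unsafe_events


def multi_objective_loss(td_loss, safety_loss, safety_weight=0.5):
    """Convex combination of the usual TD loss and a safety loss term."""
    return (1.0 - safety_weight) * td_loss + safety_weight * safety_loss


safe_step = shaped_reward(avg_wait_s=12.0, unsafe_events=0)
unsafe_step = shaped_reward(avg_wait_s=12.0, unsafe_events=2)
combined = multi_objective_loss(td_loss=2.0, safety_loss=4.0, safety_weight=0.5)
```

With equal waiting time, the step containing unsafe events receives a strictly lower reward, so the agent is pushed toward policies that avoid violations even when they cost some mobility. The weights control that trade-off and would need tuning in practice.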